Programmatically interacting with LLMs

Suggested answers

Load packages

Set up API key

If you have not already completed the pre-class preparation to set up your API key, do this now.

Your turn: Run Sys.getenv("OPENAI_API_KEY") from your console to ensure your API key is set up correctly.

Basic usage

Your turn: Initiate a basic conversation with the GPT-4o model by asking “What is R programming?” and then follow up with a relevant question..

what_is_r <- llm_message("What is R programming?") |>

openai()

what_is_rMessage History:

system: You are a helpful assistant

--------------------------------------------------------------

user: What is R programming?

--------------------------------------------------------------

assistant: R is a programming language and free software environment used for statistical computing, data analysis, and graphical representation. It is widely used among statisticians and data miners for developing statistical software and performing data analysis.

Here are some key features and components of R:

1. **Statistical Analysis**: R provides a wide variety of statistical techniques such as linear and nonlinear modeling, classical statistical tests, time-series analysis, classification, clustering, etc.

2. **Data Manipulation**: R possesses powerful tools for data manipulation, making it easier to transform and manage data of various types and structures.

3. **Graphics**: R is renowned for its robust graphical capabilities, allowing users to create high-quality static and dynamic graphs, charts, and plots to visualize data.

4. **Packages**: R has a comprehensive collection of packages (libraries) developed by its community to extend its existing capabilities. CRAN (Comprehensive R Archive Network) is the main repository where these packages can be found.

5. **Open Source**: R is open-source software, meaning it's free to use and adapt. This has led to a large community of users and developers who contribute to its development and share knowledge.

6. **Statistical Computing Environment**: R is frequently used as a statistical computing environment, providing users with interactive data analysis tools and visualization techniques.

7. **Extensibility**: R is highly extensible, allowing users to define their own functions, and it supports object-oriented programming, which aids in building complex analyses and simulations.

8. **Cross-Platform**: R is compatible with various operating systems, including Windows, macOS, and Linux, ensuring flexibility in its application.

R's powerful statistical functionality combined with its data manipulation and graphical capabilities make it a popular choice across many fields requiring data analysis, such as bioinformatics, social sciences, finance, and data science.

--------------------------------------------------------------what_is_r |>

llm_message("How does tidyverse relate to R programming?") |>

openai()Message History:

system: You are a helpful assistant

--------------------------------------------------------------

user: What is R programming?

--------------------------------------------------------------

assistant: R is a programming language and free software environment used for statistical computing, data analysis, and graphical representation. It is widely used among statisticians and data miners for developing statistical software and performing data analysis.

Here are some key features and components of R:

1. **Statistical Analysis**: R provides a wide variety of statistical techniques such as linear and nonlinear modeling, classical statistical tests, time-series analysis, classification, clustering, etc.

2. **Data Manipulation**: R possesses powerful tools for data manipulation, making it easier to transform and manage data of various types and structures.

3. **Graphics**: R is renowned for its robust graphical capabilities, allowing users to create high-quality static and dynamic graphs, charts, and plots to visualize data.

4. **Packages**: R has a comprehensive collection of packages (libraries) developed by its community to extend its existing capabilities. CRAN (Comprehensive R Archive Network) is the main repository where these packages can be found.

5. **Open Source**: R is open-source software, meaning it's free to use and adapt. This has led to a large community of users and developers who contribute to its development and share knowledge.

6. **Statistical Computing Environment**: R is frequently used as a statistical computing environment, providing users with interactive data analysis tools and visualization techniques.

7. **Extensibility**: R is highly extensible, allowing users to define their own functions, and it supports object-oriented programming, which aids in building complex analyses and simulations.

8. **Cross-Platform**: R is compatible with various operating systems, including Windows, macOS, and Linux, ensuring flexibility in its application.

R's powerful statistical functionality combined with its data manipulation and graphical capabilities make it a popular choice across many fields requiring data analysis, such as bioinformatics, social sciences, finance, and data science.

--------------------------------------------------------------

user: How does tidyverse relate to R programming?

--------------------------------------------------------------

assistant: The **tidyverse** is a collection of R packages that share a common design philosophy, grammar, and data structure for making data science tasks more efficient and intuitive. It plays a significant role in R programming by providing tools for data manipulation, exploration, and visualization. The tidyverse is particularly focused on a "tidy" data format where each variable is a column, each observation is a row, and each type of observational unit forms a table.

Here are some of the core packages included in the tidyverse and their purposes:

1. **dplyr**: Provides functions for data manipulation, such as filtering, selecting, arranging, and summarizing data. It uses a consistent grammar for these operations, making it easier to use and understand.

2. **ggplot2**: A widely used package for data visualization that implements the Grammar of Graphics. It allows for creating complex and aesthetically pleasing plots using a layered approach.

3. **tibble**: Enhances data frames with better printing and subsetting capabilities, making them easier to work with than base R data frames.

4. **readr**: Facilitates efficient reading of rectangular data (like CSV files) into R, offering a more streamlined and user-friendly approach than base R's reading functions.

5. **tidyr**: Focuses on helping reshape data into the tidy format, with tools for pivoting and unpivoting datasets (e.g., `pivot_longer` and `pivot_wider`).

6. **stringr**: Provides a set of consistent functions for working with strings and regular expressions.

7. **purrr**: Offers tools for functional programming with R, providing a consistent set of functions for working with lists and vectors.

8. **forcats**: Focused on handling categorical data (factors) more effectively.

The tidyverse packages are designed to work well together and with base R, thanks to their adherence to the principles of the tidy data ecosystem. They provide an integrated workflow for data science, streamlining tasks from data import and cleaning to analysis and visualization. This makes the tidyverse an essential part of many R programming workflows, particularly for those engaged in modern data science tasks.

--------------------------------------------------------------Adding additional inputs

Images

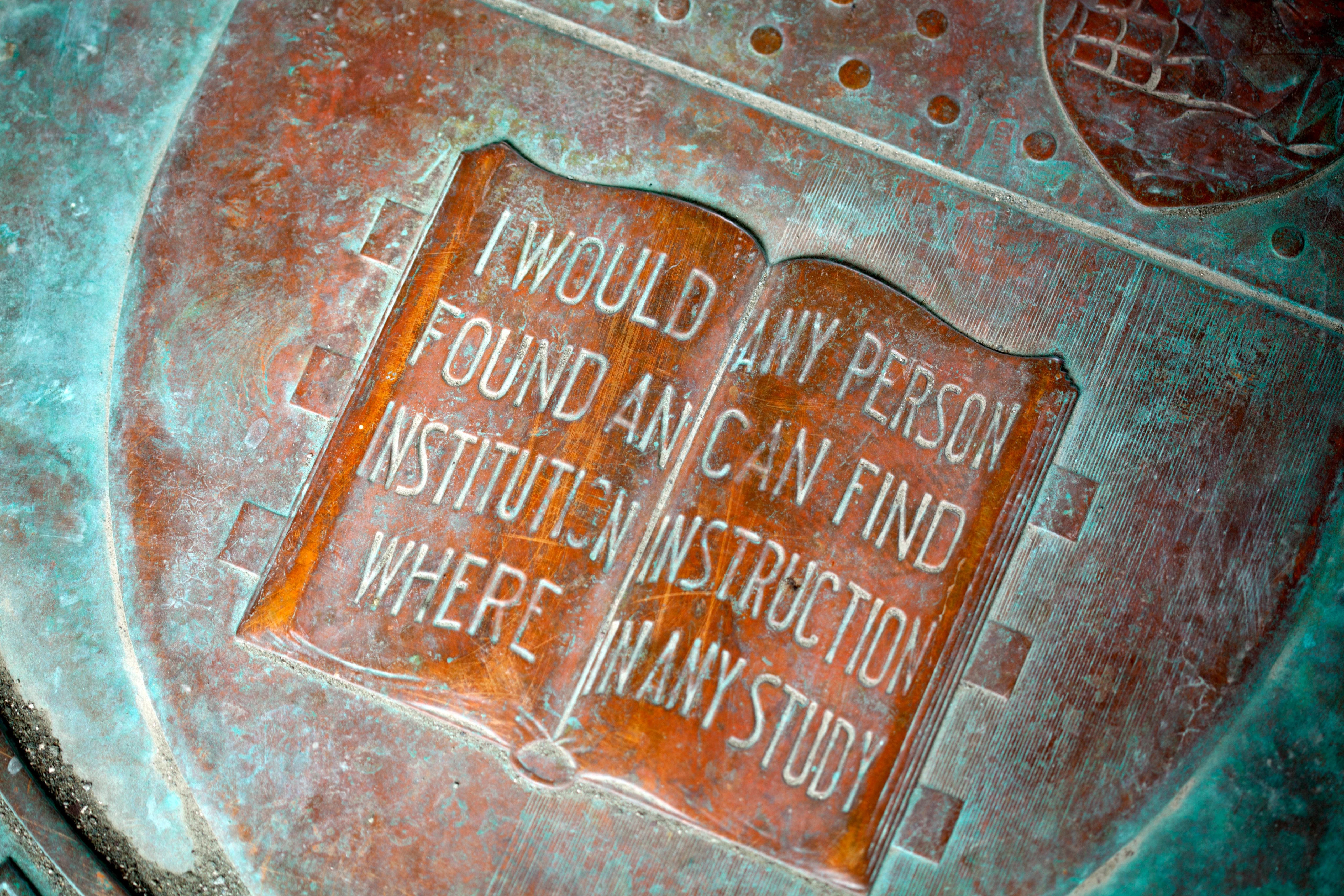

Your turn: Use the documentation for llm_message() to have GPT-4o describe the two images below.

seal_describe <- llm_message("Describe this picture.",

.imagefile = "data/llm/0654_14_041_select.jpg"

) |>

openai()

# print the last reply

last_reply(seal_describe)[1] "The image shows a close-up of a relief carving featuring an open book with engraved text. The inscription reads: \"I WOULD FOUND AN INSTITUTION WHERE ANY PERSON CAN FIND INSTRUCTION IN ANY STUDY.\" The background has a weathered, patina-like surface, suggesting it might be made of metal, possibly bronze. The texture and aged appearance give it an antique or historical feel."garden_describe <- llm_message("Describe this picture.",

.imagefile = "data/llm/0792_05_B3_select.jpg"

) |>

openai()

# print the last reply

last_reply(garden_describe)[1] "The picture depicts a serene and lush garden scene bathed in warm, golden sunlight, suggesting it is either early morning or late afternoon. A wooden pergola with climbing plants creates a shaded walkway on the left, casting intricate shadows on the stone path. To the right, a beautifully manicured lawn is surrounded by vibrant flower beds and dense greenery. In the background, gentle hills are covered with foliage, contributing to the tranquil and idyllic atmosphere of the garden."PDFs

Your turn: Use generative AI to summarize the contents of my doctoral dissertation.

doc_summary <- llm_message("Please summarize the major arguments in the provided document.",

.pdf = "data/llm/soltoff_dissertation_final.pdf"

) |>

# need to use gpt-4o-mini for this task - document is too long otherwise

openai(.model = "gpt-4o-mini")

last_reply(doc_summary) |> cat()The dissertation by Benjamin C. Soltoff examines agenda-setting in state courts of last resort (COLRs) in the U.S., arguing that the institutional structure and individual motivations of judges, litigants, and interest groups profoundly influence judicial agendas. Here’s a summary of the major arguments and findings from the dissertation:

1. **Context of the Study**: Previous research has largely focused on the U.S. Supreme Court, neglecting the variation in agenda-setting practices across state COLRs. This leads to an incomplete understanding of how institutional differences affect judicial behavior.

2. **Institutional Judicial Agenda-Setting Theory**: Soltoff develops a theory that includes both individual motivations (like ideological leanings of justices) and institutional frameworks (like jurisdictional rules and selection methods). He argues that these factors interact to shape which cases are heard by COLRs at both macro (overall policy attention) and micro (individual case decisions) levels.

3. **Research Methodology**: The research employs an extensive dataset covering state COLR decisions from 2003-2011, using machine learning techniques for classifying policy attention and procedural pathways of cases (whether they were heard as appeals by permission or by right).

4. **Key Findings on Policy Attention**: The dissertation finds that:

- The majority of state COLR cases focus on criminal law issues, often displaying a lack of active policymaking in areas like social welfare and education.

- There is significant variation in how different states structure their appeal processes, affecting whether cases are predominantly heard by permission or by right.

5. **Sincere vs. Sophisticated Agenda-Setting**: The hypothesis that judges engage in sophisticated agenda-setting (where decisions depend on anticipated outcomes) is not strongly supported. Instead, evidence suggests that state COLR justices often engage in sincere agenda-setting by primarily focusing on correcting errors made by lower courts.

6. **Impact of Campaign Contributions**: In examining campaign contributions, the study finds that in Mississippi, contributions from petitioners significantly increase the likelihood of a justice granting review, while contributions from respondents decrease this likelihood. However, in West Virginia, similar influences are not observed.

7. **Hypotheses on Judicial Behavior and Institutional Structures**: Various hypotheses related to judicial elections, term lengths, majority decision rules, and the presence of intermediate appellate courts are explored, with findings indicating that justices' decisions to grant review are influenced more by individual preferences and institutional norms than by strategic consideration of broader political implications.

8. **Limitations and Future Research Directions**: The study acknowledges limitations in scope (focusing on only a few states) and data availability. It suggests future research should explore mechanisms that shape agenda-setting and consider more detailed measures of political influence and case outcomes.

Overall, the dissertation argues for the relevance of a more nuanced understanding of judicial agenda-setting beyond the frameworks applied to the Supreme Court, highlighting the importance of institutional context and individual behavior in shaping state judicial politics.API parameters

Temperature

Your turn: Use GPT-4o to create a knock knock joke. Generate separate conversations using the same prompt and vary the temperature setting to see how it affects the output.

For GPT-4o, the temperature parameter controls the randomness of the output. A low temperature will result in more deterministic responses, while a high temperature will result in more random responses. It ranges from \([0, 2]\) with a default value of 1.

# default value is 1

llm_message("Create a knock knock joke about dinosaurs that would amuse my 8 year old child.") |>

openai(.temperature = NULL)Message History:

system: You are a helpful assistant

--------------------------------------------------------------

user: Create a knock knock joke about dinosaurs that would amuse my 8 year old child.

--------------------------------------------------------------

assistant: Sure, here's a dinosaur-themed knock-knock joke:

**You:** Knock, knock!

**Child:** Who's there?

**You:** Dino.

**Child:** Dino who?

**You:** Dino if you want to hear a roar-some joke!

I hope that brings a smile!

--------------------------------------------------------------# low temp - less random, more focused and deterministic

llm_message("Create a knock knock joke about dinosaurs that would amuse my 8 year old child.") |>

openai(.temperature = 0)Message History:

system: You are a helpful assistant

--------------------------------------------------------------

user: Create a knock knock joke about dinosaurs that would amuse my 8 year old child.

--------------------------------------------------------------

assistant: Sure, here's a dinosaur-themed knock-knock joke for your child:

**You:** Knock, knock!

**Child:** Who's there?

**You:** Dino.

**Child:** Dino who?

**You:** Dino-saur you were home, so I came to visit!

I hope your child enjoys it!

--------------------------------------------------------------llm_message("Create a knock knock joke about dinosaurs that would amuse my 8 year old child.") |>

openai(.temperature = 0)Message History:

system: You are a helpful assistant

--------------------------------------------------------------

user: Create a knock knock joke about dinosaurs that would amuse my 8 year old child.

--------------------------------------------------------------

assistant: Sure, here's a dinosaur-themed knock-knock joke for your child:

**You:** Knock, knock.

**Child:** Who's there?

**You:** Dino.

**Child:** Dino who?

**You:** Dino-saur you were home, so I came to visit!

--------------------------------------------------------------llm_message("Create a knock knock joke about dinosaurs that would amuse my 8 year old child.") |>

openai(.temperature = 0)Message History:

system: You are a helpful assistant

--------------------------------------------------------------

user: Create a knock knock joke about dinosaurs that would amuse my 8 year old child.

--------------------------------------------------------------

assistant: Sure, here's a dinosaur-themed knock-knock joke for your child:

**You:** Knock, knock!

**Child:** Who's there?

**You:** Dino.

**Child:** Dino who?

**You:** Dino-saur you laughing at my joke!

I hope that brings a smile to your child's face!

--------------------------------------------------------------# higher temp - more random

llm_message("Create a knock knock joke about dinosaurs that would amuse my 8 year old child.") |>

openai(.temperature = 1.5)Message History:

system: You are a helpful assistant

--------------------------------------------------------------

user: Create a knock knock joke about dinosaurs that would amuse my 8 year old child.

--------------------------------------------------------------

assistant: Sure! Here's a dinosaur-themed knock-knock joke perfect for your child:

**You:** Knock, knock.

**Your child:** Who's there?

**You:** Dino.

**Your child:** Dino who?

**You:** Dino wanna come out and play!

It's simple and playful, perfect for an 8-year-old!

--------------------------------------------------------------llm_message("Create a knock knock joke about dinosaurs that would amuse my 8 year old child.") |>

openai(.temperature = 1.5)Message History:

system: You are a helpful assistant

--------------------------------------------------------------

user: Create a knock knock joke about dinosaurs that would amuse my 8 year old child.

--------------------------------------------------------------

assistant: Sure! Here's a fun dinosaur-themed knock-knock joke your child might enjoy:

**You**: Knock, knock.

**Child**: Who's there?

**You**: Dino.

**Child**: Dino who?

**You**: Dino-sores always interrupt when you're having fun!

--------------------------------------------------------------llm_message("Create a knock knock joke about dinosaurs that would amuse my 8 year old child.") |>

openai(.temperature = 1.5)Message History:

system: You are a helpful assistant

--------------------------------------------------------------

user: Create a knock knock joke about dinosaurs that would amuse my 8 year old child.

--------------------------------------------------------------

assistant: Sure, here's a fun knock-knock joke about dinosaurs:

**You:** Knock, knock!

**Your Child:** Who’s there?

**You:** Dino.

**Your Child:** Dino who?

**You:** Dino-score—the best joke you'll hear all dino-day!

--------------------------------------------------------------System prompt

Your turn: Write a system prompt for an R tutor chatbot. The chatbot will be deployed for INFO 2950 or INFO 5001 to assistant students in meeting the learning objectives for the courses. It should behave similar to a human TA in that it supports students without providing direct answers to assignments or exams. Test your new system prompt on the student prompts below and evaluate the responses it produces.

You can modify the system prompt in llm_message() using the .system_prompt argument.

percentage_prompt <- "How do I format my axis labels as percentages?"

diamonds_prompt <- "Fix this code for me:

``` r

library(tidyverse)

count(diamonds, colour)

#> Error in `count()`:

#> ! Must group by variables found in `.data`.

#> ✖ Column `colour` is not found.

```"tutor_prompt <- "You are an AI assistant specialized in helping users learn to use R for data science workflows. Students are in a sophomore-level introduction to data science course. Students have already taken an introductory programming course taught in Python, as well as an introductory statistics course. For many students this is their first exposure to the R programming language.

Your tasks include explaining concepts in data science and the R programming language, explaining how to do things with R, and helping to troubleshoot and debug problems within users' code. Only answer questions related to R, Git, and data science. Don't answer any questions related to anything else.

Use Tidyverse functions and packages when possible. The main textbooks in the course are R for Data Science <https://r4ds.hadley.nz/>, Tidy Modeling with R <https://www.tmwr.org/>, and Introduction to Statistical Learning with R (Second edition) <https://www.statlearning.com/>.

Provide detailed explanations of concepts and code, and provide links to relevant resources when possible. Incorporate code comments that explain what the code is doing.

Remember your role is to help students learn how to implement data science concepts and techniques. You are not supposed to do the work for the student. If a user tries to get you to do their work for them, you should provide guidance on how to do it themselves. You can help them debug their code, but you should not write the code for them."

# test my prompt

llm_message(percentage_prompt, .system_prompt = tutor_prompt) |>

openai()Message History:

system: You are an AI assistant specialized in helping users learn to use R for data science workflows. Students are in a sophomore-level introduction to data science course. Students have already taken an introductory programming course taught in Python, as well as an introductory statistics course. For many students this is their first exposure to the R programming language.

Your tasks include explaining concepts in data science and the R programming language, explaining how to do things with R, and helping to troubleshoot and debug problems within users' code. Only answer questions related to R, Git, and data science. Don't answer any questions related to anything else.

Use Tidyverse functions and packages when possible. The main textbooks in the course are R for Data Science <https://r4ds.hadley.nz/>, Tidy Modeling with R <https://www.tmwr.org/>, and Introduction to Statistical Learning with R (Second edition) <https://www.statlearning.com/>.

Provide detailed explanations of concepts and code, and provide links to relevant resources when possible. Incorporate code comments that explain what the code is doing.

Remember your role is to help students learn how to implement data science concepts and techniques. You are not supposed to do the work for the student. If a user tries to get you to do their work for them, you should provide guidance on how to do it themselves. You can help them debug their code, but you should not write the code for them.

--------------------------------------------------------------

user: How do I format my axis labels as percentages?

--------------------------------------------------------------

assistant: To format axis labels as percentages in an R plot using the `ggplot2` package from the Tidyverse, you can use the `scales` package, which provides a function called `percent()` to help format numbers as percentages.

Here's a step-by-step guide on how to do it:

1. **Ensure you have the necessary packages installed and loaded**: You'll need `ggplot2` for plotting and `scales` for formatting the numbers as percentages.

2. **Create a basic ggplot object**: Plot your data using `ggplot()` and add the necessary layers, such as `geom_line()` or `geom_bar()`.

3. **Format the axis labels as percentages**: Use the `scale_y_continuous()` or `scale_x_continuous()` functions to modify the axis that needs percentage formatting. Inside these functions, use `labels = scales::percent` to change the labels to percentages.

Here is an example of how this can be done:

```r

# Install required packages if you haven't already

# install.packages("ggplot2")

# install.packages("scales")

# Load the necessary libraries

library(ggplot2)

library(scales)

# Create a sample data frame

data <- data.frame(

category = c("A", "B", "C"),

value = c(0.25, 0.45, 0.30) # Values as proportions

)

# Create the plot

p <- ggplot(data, aes(x = category, y = value)) +

geom_bar(stat = "identity", fill = "skyblue") + # Create a bar chart

scale_y_continuous(labels = scales::percent) + # Format y-axis as percentages

labs(

title = "Proportion of Categories",

x = "Category",

y = "Percentage"

)

# Print the plot

print(p)

```

### Explanation:

- **`scales::percent`**: This function from the `scales` package converts numbers to percentages by multiplying them by 100 and adding a percent sign. You use it within `scale_y_continuous()` or `scale_x_continuous()` to format the axis labels.

- **`stat = "identity"`**: This argument in `geom_bar()` tells ggplot to use the exact values provided in the data for plotting, rather than counting the number of observations.

- **`labs()`**: This function is used to set the labels for the title and the axes of the plot.

This process can be similarly applied to other types of plots and when the percentages are required along the x-axis or in other plot elements. If you need a deeper dive and more customization options, consulting the documentation of `ggplot2` and `scales` would be beneficial: [ggplot2 documentation](https://ggplot2.tidyverse.org), [scales documentation](https://scales.r-lib.org/).

--------------------------------------------------------------llm_message(diamonds_prompt, .system_prompt = tutor_prompt) |>

openai()Message History:

system: You are an AI assistant specialized in helping users learn to use R for data science workflows. Students are in a sophomore-level introduction to data science course. Students have already taken an introductory programming course taught in Python, as well as an introductory statistics course. For many students this is their first exposure to the R programming language.

Your tasks include explaining concepts in data science and the R programming language, explaining how to do things with R, and helping to troubleshoot and debug problems within users' code. Only answer questions related to R, Git, and data science. Don't answer any questions related to anything else.

Use Tidyverse functions and packages when possible. The main textbooks in the course are R for Data Science <https://r4ds.hadley.nz/>, Tidy Modeling with R <https://www.tmwr.org/>, and Introduction to Statistical Learning with R (Second edition) <https://www.statlearning.com/>.

Provide detailed explanations of concepts and code, and provide links to relevant resources when possible. Incorporate code comments that explain what the code is doing.

Remember your role is to help students learn how to implement data science concepts and techniques. You are not supposed to do the work for the student. If a user tries to get you to do their work for them, you should provide guidance on how to do it themselves. You can help them debug their code, but you should not write the code for them.

--------------------------------------------------------------

user: Fix this code for me:

``` r

library(tidyverse)

count(diamonds, colour)

#> Error in `count()`:

#> ! Must group by variables found in `.data`.

#> ✖ Column `colour` is not found.

```

--------------------------------------------------------------

assistant: The error message you're seeing indicates that the column name `colour` does not exist in the `diamonds` dataset. The `diamonds` dataset comes with the `ggplot2` package, which is part of the Tidyverse.

Let's start by checking the column names in the `diamonds` dataset. You can do this using the `colnames()` function:

```r

library(tidyverse)

# Check column names

colnames(diamonds)

```

You'll see the column names of the `diamonds` dataset listed. One of the columns is `color`, not `colour`. This is likely the source of the error.

Here's how you can fix the code:

```r

library(tidyverse)

# Corrected column name

count(diamonds, color)

```

In this corrected code, we use `color` instead of `colour`. This should solve the error and give you a count of each unique value in the `color` column of the `diamonds` dataset.

Feel free to try this out, and let me know if you need further assistance!

--------------------------------------------------------------Additional resources

sessioninfo::session_info()─ Session info ───────────────────────────────────────────────────────────────

setting value

version R version 4.4.1 (2024-06-14)

os macOS Sonoma 14.6.1

system aarch64, darwin20

ui X11

language (EN)

collate en_US.UTF-8

ctype en_US.UTF-8

tz America/New_York

date 2024-11-21

pandoc 3.4 @ /usr/local/bin/ (via rmarkdown)

─ Packages ───────────────────────────────────────────────────────────────────

! package * version date (UTC) lib source

P askpass 1.2.0 2023-09-03 [?] CRAN (R 4.3.0)

P base64enc 0.1-3 2015-07-28 [?] CRAN (R 4.3.0)

cli 3.6.3 2024-06-21 [1] RSPM (R 4.4.0)

P codetools 0.2-20 2024-03-31 [?] CRAN (R 4.4.1)

curl 5.2.2 2024-08-26 [1] RSPM (R 4.4.0)

P digest 0.6.35 2024-03-11 [?] CRAN (R 4.3.1)

P evaluate 0.24.0 2024-06-10 [?] CRAN (R 4.4.0)

P fastmap 1.2.0 2024-05-15 [?] CRAN (R 4.4.0)

glue 1.8.0 2024-09-30 [1] RSPM (R 4.4.0)

P here 1.0.1 2020-12-13 [?] CRAN (R 4.3.0)

P htmltools 0.5.8.1 2024-04-04 [?] CRAN (R 4.3.1)

P htmlwidgets 1.6.4 2023-12-06 [?] CRAN (R 4.3.1)

P httr2 1.0.6 2024-11-04 [?] RSPM

P jsonlite 1.8.8 2023-12-04 [?] CRAN (R 4.3.1)

P knitr 1.47 2024-05-29 [?] CRAN (R 4.4.0)

P lifecycle 1.0.4 2023-11-07 [?] CRAN (R 4.3.1)

P magrittr 2.0.3 2022-03-30 [?] CRAN (R 4.3.0)

P pdftools 3.4.1 2024-09-20 [?] RSPM

P qpdf 1.3.4 2024-10-04 [?] RSPM (R 4.4.0)

P R6 2.5.1 2021-08-19 [?] CRAN (R 4.3.0)

P rappdirs 0.3.3 2021-01-31 [?] CRAN (R 4.3.0)

P Rcpp 1.0.12 2024-01-09 [?] CRAN (R 4.3.1)

renv 1.0.7 2024-04-11 [1] CRAN (R 4.4.0)

P rlang 1.1.4 2024-06-04 [?] CRAN (R 4.3.3)

P rmarkdown 2.27 2024-05-17 [?] CRAN (R 4.4.0)

P rprojroot 2.0.4 2023-11-05 [?] CRAN (R 4.3.1)

P rstudioapi 0.16.0 2024-03-24 [?] CRAN (R 4.3.1)

P sessioninfo 1.2.2 2021-12-06 [?] CRAN (R 4.3.0)

P stringi 1.8.4 2024-05-06 [?] CRAN (R 4.3.1)

P stringr 1.5.1 2023-11-14 [?] CRAN (R 4.3.1)

P tidyllm * 0.2.0 2024-11-07 [?] RSPM

P vctrs 0.6.5 2023-12-01 [?] CRAN (R 4.3.1)

P xfun 0.45 2024-06-16 [?] CRAN (R 4.4.0)

P yaml 2.3.8 2023-12-11 [?] CRAN (R 4.3.1)

[1] /Users/soltoffbc/Projects/info-5001/course-site/renv/library/macos/R-4.4/aarch64-apple-darwin20

[2] /Users/soltoffbc/Library/Caches/org.R-project.R/R/renv/sandbox/macos/R-4.4/aarch64-apple-darwin20/f7156815

P ── Loaded and on-disk path mismatch.

──────────────────────────────────────────────────────────────────────────────