Programmatically interacting with LLMs

Load packages

Set up API key

If you have not already completed the pre-class preparation to set up your API key, do this now.

Your turn: Run Sys.getenv("OPENAI_API_KEY") from your console to ensure your API key is set up correctly.
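The check above can also be scripted. This is a minimal sketch using only base R; the variable names `api_key` and `key_is_set` are illustrative and not part of any package. It reports whether the key is present without printing the key itself.

```r
# Sys.getenv() returns an empty string when the variable is not set.
api_key <- Sys.getenv("OPENAI_API_KEY")

# nzchar() is TRUE only for non-empty strings.
key_is_set <- nzchar(api_key)

if (key_is_set) {
  # Report the length only, so the key never appears in rendered output.
  message("OPENAI_API_KEY is set (", nchar(api_key), " characters).")
} else {
  message("OPENAI_API_KEY is empty. Revisit the pre-class preparation.")
}
```

Reporting only the length is a small precaution against leaking the key into a rendered document or a shared console log.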

Basic usage

Your turn: Initiate a basic conversation with the GPT-4o model by asking “What is R programming?” and then follow up with a relevant question.

# add code here

Adding additional inputs

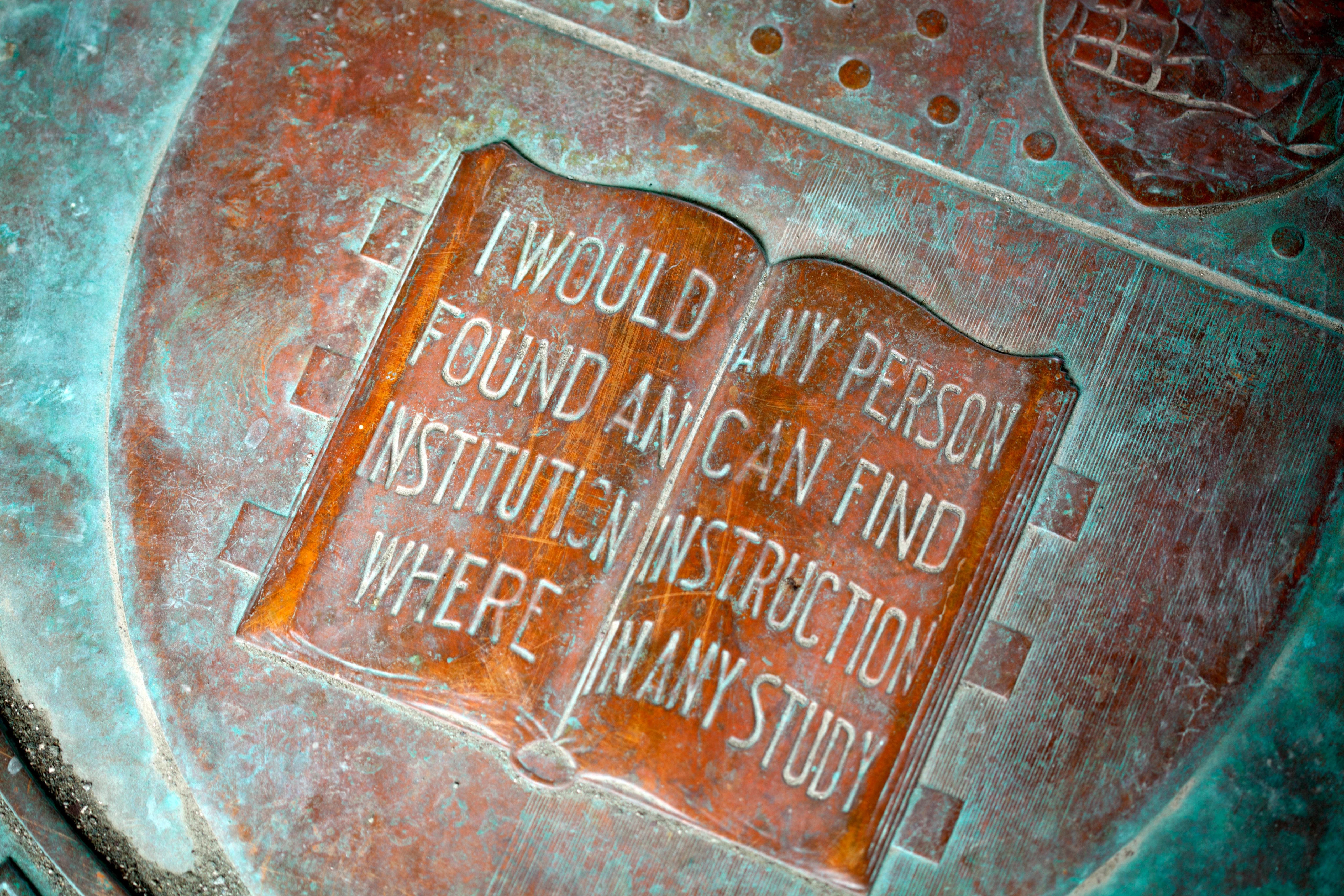

Images

Your turn: Use the documentation for llm_message() to have GPT-4o describe the two images below.

# add code here

PDFs

Your turn: Use generative AI to summarize the contents of my doctoral dissertation.

# add code here

API parameters

Temperature

Your turn: Use GPT-4o to create a knock knock joke. Generate separate conversations using the same prompt and vary the temperature setting to see how it affects the output.

For GPT-4o, the temperature parameter controls the randomness of the output. A low temperature produces more deterministic responses, while a high temperature produces more varied, random responses. It takes values in \([0, 2]\), with a default of 1.
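To build intuition for why temperature changes randomness, here is a toy illustration in base R. It assumes the common formulation in which a model's raw scores are divided by the temperature before being turned into probabilities. The scores and the helper `softmax_with_temperature()` are made up for this sketch; they are not real model output and not part of any package or of the OpenAI API.

```r
# Hypothetical helper: convert raw scores to probabilities at a given temperature.
softmax_with_temperature <- function(scores, temperature) {
  # This toy version divides by the temperature, so it must be positive.
  stopifnot(temperature > 0)
  scaled <- scores / temperature
  # Subtract the maximum before exponentiating for numerical stability.
  exp_scaled <- exp(scaled - max(scaled))
  exp_scaled / sum(exp_scaled)
}

# Made-up scores for three candidate next words.
scores <- c(dino = 2.0, rex = 1.0, raptor = 0.5)

low_temp     <- softmax_with_temperature(scores, temperature = 0.2)
default_temp <- softmax_with_temperature(scores, temperature = 1)
high_temp    <- softmax_with_temperature(scores, temperature = 2)

# Compare the three probability distributions side by side.
round(rbind(low_temp, default_temp, high_temp), 3)
```

At the low temperature almost all of the probability sits on the top-scoring word, so sampling looks nearly deterministic. At the high temperature the probabilities are closer together, so less likely words are chosen more often.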

# default value is 1
llm_message("Create a knock knock joke about dinosaurs that would amuse my 8 year old child.") |>
  openai(.temperature = NULL)

Message History:

system: You are a helpful assistant

--------------------------------------------------------------

user: Create a knock knock joke about dinosaurs that would amuse my 8 year old child.

--------------------------------------------------------------

assistant: Sure! Here's a fun dinosaur-themed knock-knock joke for your child:

**You:** Knock, knock!

**Your Child:** Who’s there?

**You:** Dino.

**Your Child:** Dino who?

**You:** D'you know any other jokes? Because this one is T-Rex-cellent!

--------------------------------------------------------------

# add code here

System prompt

Your turn: Write a system prompt for an R tutor chatbot. The chatbot will be deployed for INFO 2950 or INFO 5001 to assist students in meeting the learning objectives for the courses. It should behave similarly to a human TA in that it supports students without providing direct answers to assignments or exams. Test your new system prompt on the student prompts below and evaluate the responses it produces.

You can modify the system prompt in llm_message() using the .system_prompt argument.

percentage_prompt <- "How do I format my axis labels as percentages?"

diamonds_prompt <- "Fix this code for me:

``` r
library(tidyverse)
count(diamonds, colour)
#> Error in `count()`:
#> ! Must group by variables found in `.data`.
#> ✖ Column `colour` is not found.
```"

tutor_prompt <- "TODO"

# add code here